3 Ridiculous Rules About DeepSeek AI


It will last as long as policy is being quickly enacted to steer AI, but hopefully it won't be forever. "When there's an innovative technology that's useful to the general population and it's affordable, people will use it," said Vic Shao, founder of DC Grid, which delivers off-grid, direct-current power to data centers and electric-vehicle charging stations. Comparing this to the previous overall score graph, we can clearly see that the benchmarks' overall ceiling problem has improved. The Department of Justice and multiple state attorneys general sued Google for violating antitrust laws to dominate the search market (and won). They also sued over Google's online advertising market and expect a decision soon. According to The Wall Street Journal, Google engineers had built a generative AI chatbot more than two years before OpenAI unveiled ChatGPT. On average, conversations with Pi last 33 minutes, with one in ten lasting over an hour every day. In a joint submission with CoreWeave and NVIDIA, the cluster completed the reference training task for large language models in just eleven minutes, solidifying its position as the fastest cluster on this benchmark.

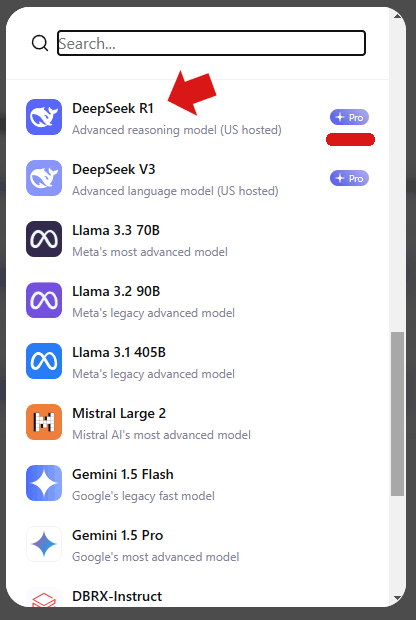

If DeepSeek V3, or a similar model, were released with full training data and code as a true open-source language model, then the cost figures could be taken at face value. Building on evaluation quicksand - why evaluations are always the Achilles' heel when training language models, and what the open-source community can do to improve the situation. Combine this with its use of under-powered Nvidia chips designed for the Chinese market, and you can see why it is making waves. DeepSeek AI, a Chinese AI research lab, has been making waves in the open-source AI community. Leading analysts have been poring through the startup's public research papers about its new model, R1, and its precursors. ★ Model merging lessons in the Waifu Research Department - an overview of what model merging is, why it works, and the unexpected groups of people pushing its limits. How RLHF works, part 2: A thin line between helpful and lobotomized - the importance of style in post-training (the precursor to this post on GPT-4o-mini). I said, "I want it to rewrite this." I said, "Write a 250-word blog post about the importance of email list hygiene for B2B marketers." Inflection AI's visionary approach extends beyond mere model development, as the company recognizes the importance of pre-training and fine-tuning in creating high-quality, safe, and helpful AI experiences.
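For readers new to the model merging mentioned above, the simplest variant is plain linear merging: take checkpoints that share one architecture and average their parameters element by element. The sketch below assumes that simple technique only (not any specific method from the linked post), and it models a checkpoint as a hypothetical dict of parameter name to a flat list of floats rather than real framework tensors.

```python
# A minimal sketch of linear model merging: a weighted, element-wise average
# of parameters from checkpoints that share the same architecture.
# Assumption: a "checkpoint" is a hypothetical dict of name -> flat list of floats,
# standing in for real framework tensors.
from typing import Dict, List, Sequence

Checkpoint = Dict[str, List[float]]


def linear_merge(checkpoints: Sequence[Checkpoint], weights: Sequence[float]) -> Checkpoint:
    """Return the weighted average of the given checkpoints, parameter by parameter."""
    if not checkpoints or len(checkpoints) != len(weights):
        raise ValueError("need at least one checkpoint and one weight per checkpoint")
    total = sum(weights)
    if total == 0:
        raise ValueError("weights must not sum to zero")
    norm = [w / total for w in weights]  # normalize so the weights sum to 1

    merged: Checkpoint = {}
    for name in checkpoints[0]:
        tensors = [ckpt[name] for ckpt in checkpoints]  # KeyError if a name is missing
        if len({len(t) for t in tensors}) != 1:
            raise ValueError(f"shape mismatch for parameter {name!r}")
        # Average the i-th element of every checkpoint's copy of this parameter.
        merged[name] = [sum(w * v for w, v in zip(norm, column)) for column in zip(*tensors)]
    return merged


if __name__ == "__main__":
    a = {"layer.weight": [1.0, 2.0], "layer.bias": [0.0]}
    b = {"layer.weight": [3.0, 4.0], "layer.bias": [1.0]}
    print(linear_merge([a, b], [0.5, 0.5]))  # {'layer.weight': [2.0, 3.0], 'layer.bias': [0.5]}
```

Unequal weights let one parent dominate; more elaborate merging schemes change how parameters are combined, but they still assume the checkpoints line up name for name and shape for shape, as this sketch checks.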

As you can see from the table above, DeepSeek-V3 posted state-of-the-art results in nine benchmarks, the most for any comparable model of its size. If DeepSeek can get the same results on less than a tenth of the development budget, all those billions don't look like such a sure bet. DeepSeek has also made significant progress on Multi-head Latent Attention (MLA) and Mixture-of-Experts, two technical designs that make DeepSeek models more cost-effective by requiring fewer computing resources to train. DeepSeek claimed it used just over 2,000 Nvidia H800 chips and spent just $5.6 million (€5.24 million) to train a model with more than 600 billion parameters. Coding and Mathematics Prowess: Inflection-2.5 shines in coding and mathematics, demonstrating an improvement of over 10% on Inflection-1 on Big-Bench-Hard, a subset of challenging problems for large language models. Italy has banned the platform over data-transfer risks, while Belgium and Ireland have launched privacy probes. While much of the progress has happened behind closed doors in frontier labs, we have seen a lot of effort in the open to replicate these results.
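To make the Mixture-of-Experts idea above concrete: a router scores every expert for each token, only the top-k experts actually run, and their outputs are mixed by gate weights. The sketch below uses generic top-k softmax gating; it is an illustrative assumption, not DeepSeek's specific MoE design, which the text does not describe. The router logits are taken as given, and the toy experts are hypothetical functions.

```python
# A minimal sketch of generic top-k Mixture-of-Experts routing for a single token.
# Assumptions: router logits are already computed; experts are plain functions
# mapping a vector (list of floats) to a vector of the same length.
import math
from typing import Callable, List, Sequence, Tuple

Expert = Callable[[List[float]], List[float]]


def softmax(xs: Sequence[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]


def moe_forward(x: List[float], gate_logits: Sequence[float],
                experts: Sequence[Expert], k: int = 2) -> Tuple[List[float], List[int]]:
    """Route token x to its top-k experts and return (mixed output, chosen expert ids)."""
    if len(gate_logits) != len(experts):
        raise ValueError("need one router logit per expert")
    if not 1 <= k <= len(experts):
        raise ValueError("k must be between 1 and the number of experts")
    # Pick the k experts with the highest router scores (ties keep index order).
    top = sorted(range(len(experts)), key=lambda i: gate_logits[i], reverse=True)[:k]
    # Renormalize the gate over the selected experts only.
    gates = softmax([gate_logits[i] for i in top])
    out = [0.0] * len(x)
    for g, i in zip(gates, top):
        y = experts[i](x)  # only the k selected experts are evaluated
        out = [o + g * v for o, v in zip(out, y)]
    return out, top


if __name__ == "__main__":
    # Hypothetical toy experts: expert i scales its input by (i + 1).
    toy_experts = [lambda v, s=i + 1: [s * e for e in v] for i in range(4)]
    print(moe_forward([1.0, 2.0], [0.0, 5.0, 5.0, -1.0], toy_experts, k=2))
```

The cost saving comes from the `k` in the loop: a model can hold many experts' worth of parameters while each token pays the compute of only a few of them.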

Even though these models are at the top of the Open LLM Leaderboard, many researchers have been pointing out that this is simply an artifact of the evaluation metrics used for benchmarking. Big U.S. tech companies are investing hundreds of billions of dollars into AI technology. But the growing number of open-source models indicates that China does not really depend on U.S. technology to advance its AI field. Across technology broadly, AI was still the biggest story of the year, as it was in 2022 and 2023. Ahead of the Lunar New Year, three other Chinese labs announced AI models they claimed could match, or even surpass, OpenAI's o1 performance on key benchmarks. Inflection AI's commitment to transparency and reproducibility is evident in its release of a technical memo detailing the evaluation and performance of Inflection-1 on various benchmarks. Then, the latent part is what DeepSeek introduced in the DeepSeek V2 paper, where the model saves on KV-cache memory by using a low-rank projection of the attention heads (at a potential cost in modeling performance). Chinese companies and government laboratories are strong in high-performance computing, and particularly in efficient high-performance AI computing.
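The memory saving from the low-rank KV-cache projection described above can be estimated with simple arithmetic. Standard multi-head attention caches a key and a value vector for every head, in every layer, for every token; a latent scheme instead caches one compressed vector per layer per token and re-expands keys and values from it. All dimensions below are hypothetical, chosen only for illustration, and the published MLA design has additional details that this back-of-the-envelope sketch ignores.

```python
# Back-of-the-envelope KV-cache sizes: standard multi-head attention (MHA)
# versus caching one low-rank latent vector per token per layer (the idea behind MLA).
# Assumption: all dimensions are hypothetical; 2 bytes per element means fp16/bf16.


def mha_cache_bytes(seq_len: int, n_layers: int, n_heads: int, d_head: int,
                    bytes_per_elem: int = 2) -> int:
    # A key AND a value vector per head, per layer, per token.
    return seq_len * n_layers * 2 * n_heads * d_head * bytes_per_elem


def latent_cache_bytes(seq_len: int, n_layers: int, d_latent: int,
                       bytes_per_elem: int = 2) -> int:
    # One d_latent-sized vector per layer, per token; keys and values are
    # reconstructed from it with learned up-projection matrices.
    return seq_len * n_layers * d_latent * bytes_per_elem


if __name__ == "__main__":
    seq_len, n_layers, n_heads, d_head, d_latent = 4096, 32, 32, 128, 512
    mha = mha_cache_bytes(seq_len, n_layers, n_heads, d_head)
    latent = latent_cache_bytes(seq_len, n_layers, d_latent)
    print(f"MHA cache:    {mha / 2**30:.2f} GiB")     # 2.00 GiB
    print(f"Latent cache: {latent / 2**20:.0f} MiB")  # 128 MiB
    print(f"Reduction:    {mha // latent}x")          # 16x
```

With these made-up sizes, each token's per-layer cache shrinks from 2 × 32 × 128 = 8,192 elements to 512, a 16x reduction. The trade-off the text mentions is that the keys and values must now pass through a low-rank bottleneck, which may cost some modeling performance.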
